AI systems that actually ship, and keep working

What we build

Replace hold times with real conversations

End-to-end engineering for real-time voice systems that handle tens of thousands of simultaneous calls. We orchestrate STT, LLM, and TTS pipelines, integrate with CRMs and support tools, and ship evaluation harnesses so teams can monitor tone, accuracy, and compliance at scale.

- LiveKit, SIP, and global telephony integrations

- Configurable model stack across OpenAI, Anthropic, Groq, or private LLMs

- Campaign analytics, containment metrics, and QA automation

Turn messy data into growth fuel

We build ingestion, processing, and enrichment pipelines that consolidate millions of records from scraped, partner, and internal sources. Deduplication, taxonomy building, quality scoring, and AI-assisted tagging turn raw data into reliable growth fuel.

- Large-scale web scraping and ingestion

- AI-driven merging, classification, and deduplication

- Operational dashboards and SLAs for ongoing data quality

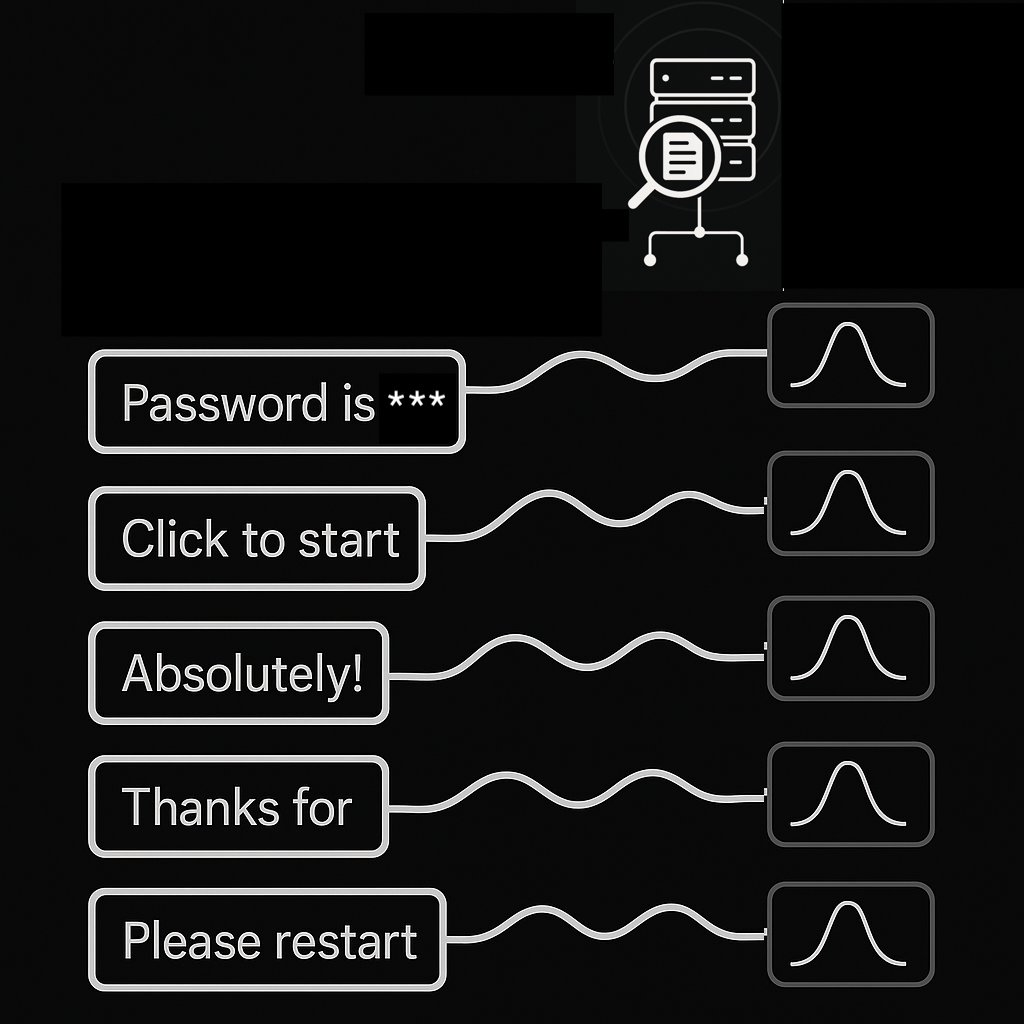

Agents that do the work, not just chat

AI agents that take natural-language requests and drive action by connecting to tools such as CRMs, databases, data stores, REST APIs, and RAG or MCP tools. They fit use cases across customer support, sales, marketing, product development, operations, and more.

- Task orchestration and tool integrations

- Retrieval-augmented prompts grounded in proprietary data

- Evaluation suites to measure productivity impact

Speed up R&D without adding headcount

Specialist copilots that assist CAD designers, analysts, and CAE engineers across ideation, simulation, and reporting. Agents plug into design and R&D processes to automate discovery, ideation, and product development cycles using internal knowledge repositories. This helps organisations iterate faster.

- Connections to knowledge hubs: PLM, CRMs, etc.

- Product development orchestration: CAD, CAE, etc.

- Role-aware workflows between engineering teams

Run LLMs on your terms

For teams with strict privacy or latency needs, we design, deploy, and operate self-hosted model stacks. Hardware sizing, secure inference servers, RBAC, usage analytics, and CI-integrated evaluation come bundled so internal builders can ship safely.

- Hardware advisory and capacity planning

- Optimised inference services with audit trails

- Centralised observability and evaluation harnesses

See what matters, in real time

We craft computer-vision models that run on constrained hardware for tasks like target acquisition and inspection. The stack spans dataset preparation, model optimisation, and deployment pipelines tuned for latency and reliability in the field.

- Segmentation and detection pipelines

- Edge deployment with quantisation and acceleration

- Telemetry to monitor performance in live environments

Customers love to work with us!

Latest Insights

Stay updated with our latest articles on AI, technology, and industry trends

Get in Touch

Have a use case in mind? Tell us what you're trying to solve and we'll share how we'd approach it—no pitch deck, just a direct conversation.