How to Create an MCP Server: An Introduction Using the SEC EDGAR API

The need for MCP Servers

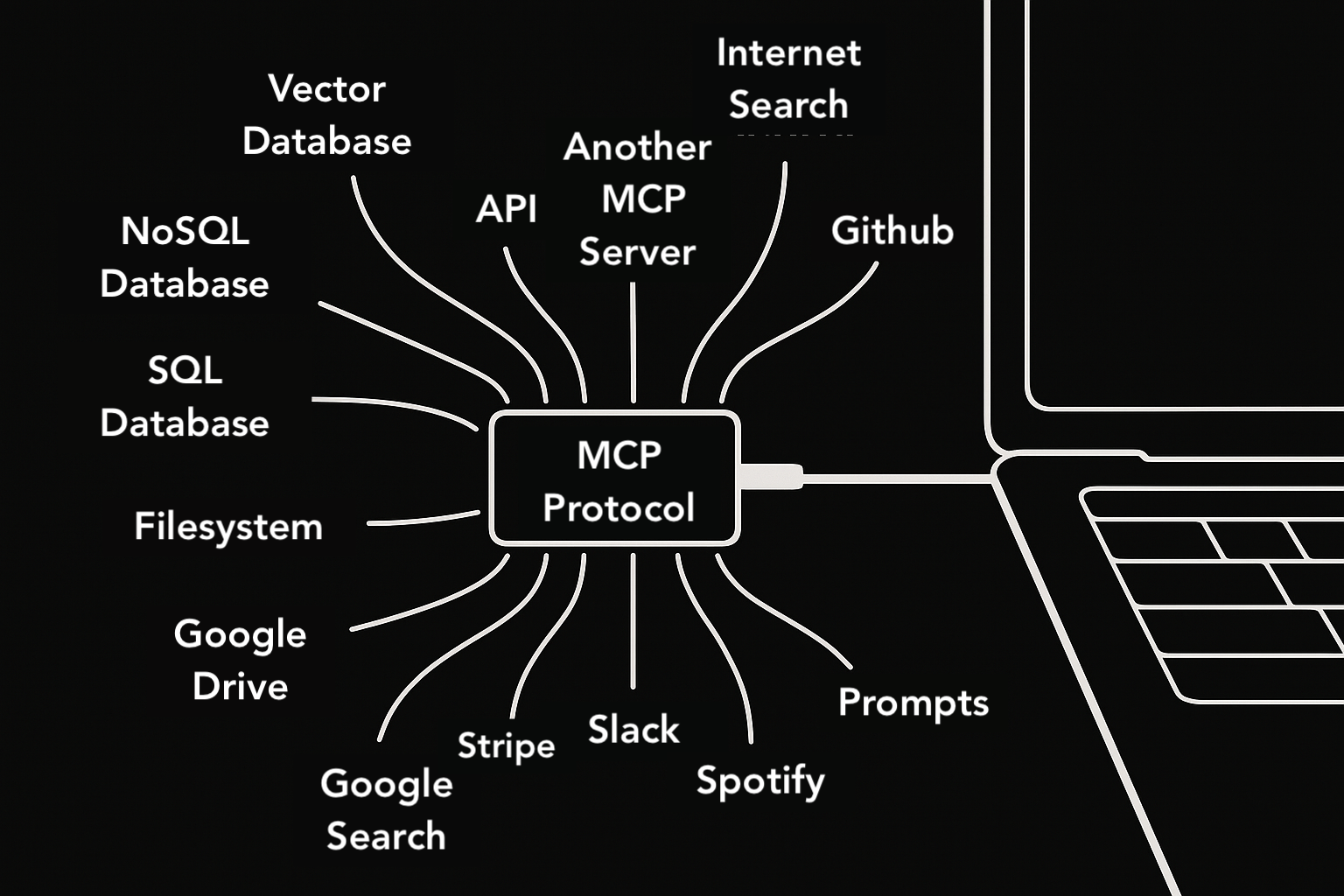

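MCP (Model Context Protocol) servers offer a standard way for AI applications to interact with external resources and data. Think of MCP as a "USB-C port for AI applications": just as USB-C gives devices one common connector, MCP gives models one common interface to data, whether that data is stored in a database, exposed through an API, or kept in a file service such as Google Drive.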

MCP is like a "USB-C port for AI applications"

Another good reason to build an MCP server is that it makes building AI agents easier: MCP exposes a server's tools to LLMs in a uniform way. Think of an agent as a human analyst whose tools are MCP servers. You tell this analyst, "Give me the total earnings per share for NVIDIA for last quarter and analyse the market risks," and provide tools such as the SEC API to base the analysis on. We used an MCP server for a Postgres database to build an agent like that, and the results were pretty good. Read here for more details.

How to Build MCP Servers: A Case Study with the SEC EDGAR API

The first step is to create an account with SEC API here and obtain an API token. Detailed documentation for all of their APIs is available here. For our MCP server example, we will use their Full-Text Search API (link here).

Understanding SEC Full-Text Search API

The SEC Filing Full-Text Search API enables searches across the full text of all EDGAR filings submitted since 2001. Each search scans the entire filing content, including all attachments, such as exhibits.

Some example searches you can run:

- "Supply chain disruption" as the query and "0000320193" (Apple) as the CIK.

- "Restructuring plan" as the query and "8-K" as the form type.

- "OpenAI" as the query and "0000789019" (Microsoft) as the CIK.
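Each of these searches maps onto the request parameters that the server code in this post sends to the full-text search API (`query`, `ciks`, `formTypes`, and optionally `startDate`, `endDate`, `page`). The sketch below uses a hypothetical helper, `build_search_parameters`, to turn the three examples into those dictionaries and drop unset filters. The `YYYY-MM-DD` date check is an extra assumption added for input hygiene; it is not part of the original server.

```python
from datetime import datetime
from typing import Dict, List, Optional, Union


def build_search_parameters(
    query: str,
    ciks: Optional[List[str]] = None,
    form_types: Optional[List[str]] = None,
    start_date: Optional[str] = None,
    end_date: Optional[str] = None,
    page: str = "1",
) -> Dict[str, Union[str, List[str]]]:
    """Hypothetical helper: build a full-text search payload, omitting unset filters."""
    # Assumption: fail early on dates that don't parse as YYYY-MM-DD
    # (datetime.strptime raises ValueError on a mismatch).
    for value in (start_date, end_date):
        if value is not None:
            datetime.strptime(value, "%Y-%m-%d")
    params = {
        "query": query,
        "ciks": ciks,
        "formTypes": form_types,
        "startDate": start_date,
        "endDate": end_date,
        "page": page,
    }
    # Keep only the filters the caller actually set.
    return {key: val for key, val in params.items() if val is not None}


# The three example searches from the list above.
examples = [
    build_search_parameters("Supply chain disruption", ciks=["0000320193"]),  # Apple
    build_search_parameters("Restructuring plan", form_types=["8-K"]),
    build_search_parameters("OpenAI", ciks=["0000789019"]),  # Microsoft
]
for params in examples:
    print(params)
```

Dropping `None` values mirrors what the server's tool does before calling the API, so only the filters you actually specify are sent.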

Building the SEC MCP Server

Create a uv-managed project and add MCP to it.

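```shell
uv init mcp-server-demo
cd mcp-server-demo
uv add "mcp[cli]"
# server.py also imports sec_api and dotenv, so add those packages too
uv add sec-api python-dotenv
```

Create a `server.py` file: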

```python
import json
import os
import sys
from typing import List, Optional

from dotenv import load_dotenv
from mcp.server.fastmcp import FastMCP
from sec_api import FullTextSearchApi

# Create a .env file containing SEC_API_KEY=<your key>; load_dotenv() reads it
load_dotenv()

SEC_API_KEY = os.getenv("SEC_API_KEY")

fullTextSearchApi = FullTextSearchApi(api_key=SEC_API_KEY)

# Create an MCP server
mcp = FastMCP("SEC API Server")
```

Add full-text search as a tool:

```python
@mcp.tool()
def full_text_search(
    query_text: str,
    form_types: Optional[List[str]] = None,
    start_date: Optional[str] = None,
    end_date: Optional[str] = None,
    ciks: Optional[List[str]] = None,
    page: Optional[str] = "1",
) -> str:
    """
    Search SEC filings using full-text search capabilities

    Args:
        query_text: Text or keywords to search for (e.g., "substantial doubt" OR "material weakness")
        form_types: List of SEC form types (e.g., ["10-K", "10-Q", "8-K"])
        start_date: Start date in YYYY-MM-DD format
        end_date: End date in YYYY-MM-DD format
        ciks: List of company CIK numbers to filter by
        page: Page number for pagination (default: "1")
    """
    # FastMCP uses this docstring as the tool description shown to the LLM
    try:
        search_parameters = {
            "query": query_text,
            "formTypes": form_types,
            "startDate": start_date,
            "endDate": end_date,
            "ciks": ciks,
            "page": page,
        }
        # Remove None values so only the filters the caller set are sent
        search_parameters = {k: v for k, v in search_parameters.items() if v is not None}

        results = fullTextSearchApi.get_filings(search_parameters)

        # Format the response with the total count and the matching filings
        response = {
            "total_results": results.get("total", {}).get("value", 0),
            "page": page,
            "filings": results.get("filings", []),
        }
        return json.dumps(response, indent=2)

    except Exception as e:
        error_msg = f"Error searching filings: {str(e)}"
        # With the stdio transport, stdout carries protocol messages, so log to stderr
        print(error_msg, file=sys.stderr)
        return error_msg
```

Finally, start the server when the script is run directly:

if __name__ == "__main__":

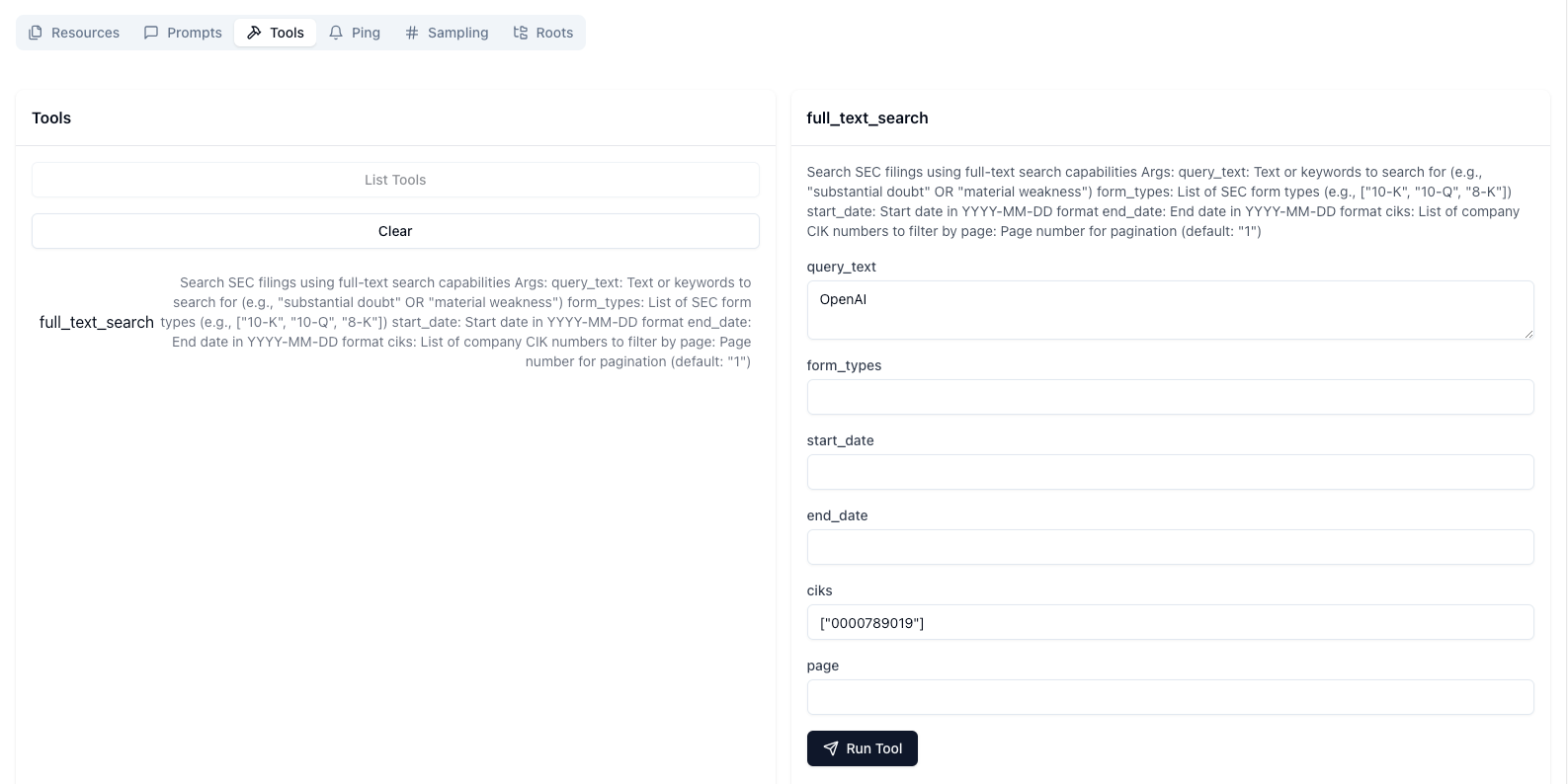

mcp.run(transport='stdio') The MCP server is ready. Before we run it, we can use MCP inspector to inspect it.

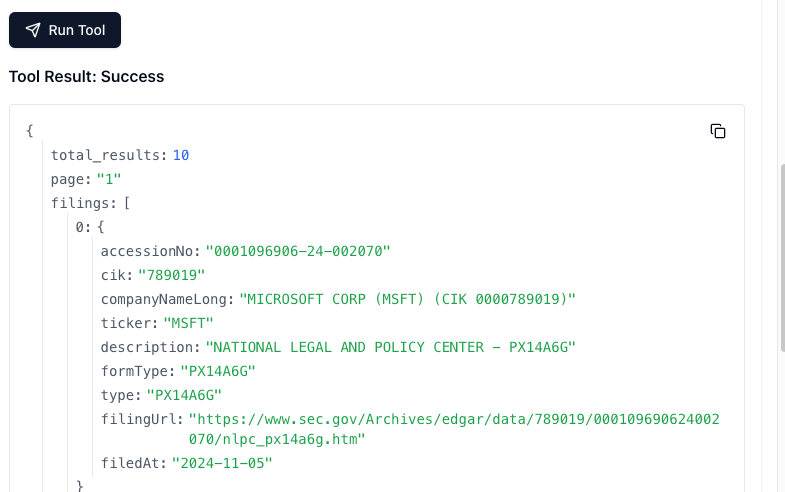

```shell
uv run mcp dev server.py
```

By default, the MCP Inspector runs on port 6274, where you can run the tools and check their output. Below is an example run with the query "OpenAI" and Microsoft's CIK.

MCP Inspector

Output of the first full-text search
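Under the hood, an MCP client such as the Inspector talks to the server with JSON-RPC 2.0 messages; with the stdio transport, each message is a single line of JSON on the server's stdin/stdout, which is also why the tool writes its errors to stderr. The sketch below builds the `tools/call` request corresponding to the example run above. It is a simplified illustration: the helper `make_tool_call` is hypothetical, a real client must first complete the MCP `initialize` handshake, and the exact message the Inspector sends may differ.

```python
import json
from typing import Any, Dict


def make_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Hypothetical helper: build one newline-delimited JSON-RPC 'tools/call' message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    # json.dumps without indent emits a single line; stdio framing needs one message per line.
    return json.dumps(message) + "\n"


# Arguments use the tool's own parameter names (query_text, ciks).
line = make_tool_call(1, "full_text_search", {"query_text": "OpenAI", "ciks": ["0000789019"]})
print(line, end="")
```

The argument names must match the Python parameter names of `full_text_search`, since FastMCP builds the tool's input schema from the function signature.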

Next Steps

You can now connect this server to an MCP-capable agent so it can use full-text search as a tool, then ask natural-language questions and get answers grounded in SEC filing data.
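On the agent side, the tool's output is either the JSON string built in `full_text_search` or a plain `Error searching filings: ...` message. Full filing records can be large, so an agent may want to condense them before handing them to the LLM. The sketch below is a hypothetical helper, `summarize_filings`; the per-filing field names (`filedAt`, `formType`, `companyNameLong`, `filingUrl`) are assumptions about the SEC API response shape, so it reads them with defaults.

```python
import json
from typing import List


def summarize_filings(tool_output: str, limit: int = 5) -> List[str]:
    """Hypothetical agent-side helper: condense full_text_search output into short lines."""
    try:
        payload = json.loads(tool_output)
    except json.JSONDecodeError:
        # The tool returns a plain "Error searching filings: ..." string on failure.
        return [tool_output]
    lines = [f"{payload.get('total_results', 0)} matching filings (page {payload.get('page')})"]
    for filing in payload.get("filings", [])[:limit]:
        # Assumed field names; missing fields fall back to placeholders.
        lines.append(
            f"{filing.get('filedAt', '?')} {filing.get('formType', '?')} "
            f"{filing.get('companyNameLong', '?')}: {filing.get('filingUrl', '')}"
        )
    return lines


# Made-up sample in the shape the tool returns (not real SEC data).
sample = json.dumps({
    "total_results": 2,
    "page": "1",
    "filings": [{"filedAt": "2024-01-01", "formType": "10-K",
                 "companyNameLong": "EXAMPLE CORP", "filingUrl": "https://example.com/a"}],
})
for entry in summarize_filings(sample):
    print(entry)
```

Capping the list with `limit` keeps the context passed to the model small; the agent can always request the next `page` if it needs more results.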

————————————————————————————————————

Thanks for reading! If you have any questions or feedback, please let me know on X or LinkedIn.